Machine learning models are transforming industries. From healthcare to finance, businesses rely on predictive systems trained on massive datasets. However, as models become more powerful, new security risks emerge. Among the most concerning are data inversion attacks.

These attacks expose sensitive information hidden inside trained models. Even if raw data is never shared publicly, attackers can still reconstruct private details. This creates serious privacy and compliance risks for organizations.

In this article, we will explore what data inversion attacks are, how they work, why they matter, and how to defend against them.

Understanding Data Inversion Attacks

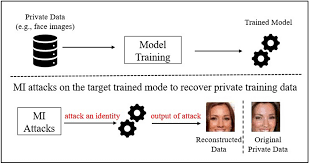

Data inversion attacks (often called model inversion attacks in the research literature) occur when an attacker tries to reconstruct training data by exploiting access to a machine learning model. Instead of stealing the dataset directly, the attacker queries the model and analyzes its outputs.

The goal is to reverse-engineer sensitive information.

For example:

- Reconstructing facial images from a facial recognition system

- Recovering medical attributes from a health prediction model

- Extracting personal data from financial risk models

Even if the model exposes nothing more than prediction probabilities, attackers can use mathematical optimization techniques to estimate inputs that closely resemble the original training data.

How Data Inversion Attacks Work

Model Access and Querying

Attackers first gain access to a trained model. This could be:

- Public API endpoints

- SaaS platforms

- Leaked internal systems

- Open-source deployed models

They then submit carefully crafted queries.

Output Analysis

The attacker studies prediction probabilities rather than just labels. These probabilities contain more information than most developers realize.

For example:

- Confidence scores

- Class probabilities

- Gradients (in white-box scenarios)

Using optimization algorithms, attackers iteratively adjust their input guesses until the model's output matches a target pattern, such as maximum confidence for a chosen class.
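To make this loop concrete, here is a minimal Python sketch of a black-box inversion against a toy model. Everything in it is hypothetical: `predict_proba` stands in for a deployed API that returns only a confidence score, and the hidden weights are made up. The attacker never reads those weights. It estimates gradients by finite differences (one extra query per feature) and climbs toward the input the model considers most typical of the target class. Against real models, this kind of search recovers a representative input for a class rather than an exact training record.

```python
import math

# Hypothetical victim model: a logistic classifier whose parameters the attacker cannot see.
_SECRET_WEIGHTS = [2.0, -1.5, 0.5]
_SECRET_BIAS = -0.25


def predict_proba(x):
    """Black-box API: returns only the confidence for the target (positive) class."""
    z = sum(w * xi for w, xi in zip(_SECRET_WEIGHTS, x)) + _SECRET_BIAS
    return 1.0 / (1.0 + math.exp(-z))


def invert(query, dim, steps=300, lr=0.5, eps=1e-3, bound=1.0):
    """Search for an input that maximizes the target-class confidence using only queries."""
    x = [0.0] * dim  # start from a neutral guess
    for _ in range(steps):
        base = query(x)
        # Finite-difference gradient estimate: one extra query per feature.
        grad = []
        for i in range(dim):
            probe = list(x)
            probe[i] += eps
            grad.append((query(probe) - base) / eps)
        # Gradient-ascent step, clipped to an assumed valid feature range [-bound, bound].
        x = [max(-bound, min(bound, xi + lr * g)) for xi, g in zip(x, grad)]
    return x


recovered = invert(predict_proba, dim=3)
print([round(v, 2) for v in recovered], round(predict_proba(recovered), 3))
```

Each step costs `dim + 1` queries, so even this tiny example sends over a thousand near-identical requests. That query pattern is exactly what the access control and monitoring defenses below aim to catch.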

Reconstruction of Sensitive Data

Over time, the attacker refines their input to reconstruct:

- Approximate images

- Text sequences

- Structured personal data

This process does not require direct access to training data.

Why Data Inversion Attacks Are Dangerous

Violation of Privacy

Many organizations assume that once data has been used to train a model, it is safe. However, inversion attacks show that models can memorize training information and leak it through their outputs.

This is especially dangerous for:

- Healthcare systems

- Biometric systems

- Financial risk engines

- Identity verification platforms

Regulatory Risks

Data protection regulations, such as the EU's GDPR and similar privacy laws elsewhere, require strict protection of personal information. If a model leaks personal data indirectly, companies may still be liable.

Business Reputation Damage

A successful inversion attack can damage trust. Customers expect their information to remain confidential. Even indirect leakage can harm brand credibility.

Real-World Scenarios

Facial Recognition Systems

Researchers have demonstrated reconstruction of recognizable faces from trained models. If a company stores biometric data and exposes prediction APIs, attackers could reconstruct user identities.

Healthcare Predictive Models

Medical models trained on patient records can leak sensitive attributes. An attacker could infer health conditions by carefully analyzing outputs.

Language Models

Large language models trained on proprietary documents may reveal memorized fragments of sensitive content.

Relationship Between Data Inversion and Other Attacks

Data inversion is often confused with other machine learning attacks. However, there are important differences.

Model Extraction Attacks

These aim to replicate the model itself.

Membership Inference Attacks

These determine whether a specific data point was used in training.

Data Inversion Attacks

These reconstruct training data samples, or close approximations of them.

While distinct, these attacks often overlap in techniques.

Role of Data Engineering in Security

Strong data pipelines and secure model deployment practices reduce risks significantly. Organizations offering robust data integration engineering services help ensure that data flows, storage, and model environments follow strict governance standards. Secure architecture limits exposure and reduces opportunities for attackers to exploit model outputs.

Companies such as brickclay.com emphasize structured data management strategies that align machine learning deployment with enterprise-grade security controls. Proper engineering foundations are critical in minimizing privacy leakage risks.

Defense Strategies Against Data Inversion Attacks

Protecting machine learning systems requires layered defenses.

Differential Privacy

Differential privacy adds carefully calibrated noise during training. This bounds how much any single training example can influence the final model, which limits how much the model can memorize about any individual.
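Formally, a randomized training mechanism M satisfies (ε, δ)-differential privacy if, for every pair of datasets D and D′ that differ in a single record and every set of possible outputs S:

```latex
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S] + \delta
```

Smaller values of ε and δ mean that adding or removing any one person's record barely changes which models training can produce, so an attacker querying the result learns little about that individual.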

Benefits:

- Reduces leakage risk

- Provides mathematical privacy guarantees

- Suitable for sensitive datasets
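DP-SGD is one common way to train with differential privacy, and its core fits in a few lines. The sketch below is a plain-Python illustration for logistic regression, assuming a batch of `(features, label)` pairs with labels in {0, 1}. It clips each example's gradient to `max_norm`, sums the clipped gradients, adds Gaussian noise with standard deviation `noise_multiplier * max_norm`, and averages. It deliberately omits privacy accounting (tracking the total ε spent across all steps), which is what turns this mechanism into a stated guarantee; libraries such as Opacus for PyTorch and TensorFlow Privacy handle that in practice. The parameter values are illustrative, not recommendations.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, batch, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=random):
    """One DP-SGD-style update for logistic regression (no bias term, for brevity)."""
    summed = [0.0] * len(weights)
    for x, y in batch:
        z = sum(w * xi for w, xi in zip(weights, x))
        p = 1.0 / (1.0 + math.exp(-z))
        # Per-example gradient of the log loss, clipped BEFORE aggregation so that
        # no single record can move the sum by more than max_norm.
        g = clip([(p - y) * xi for xi in x], max_norm)
        summed = [s + gi for s, gi in zip(summed, g)]
    # Gaussian noise scaled to the clipping bound masks any one record's contribution.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [w - lr * n / len(batch) for w, n in zip(weights, noisy)]


# Usage on a toy dataset (hypothetical data).
rng = random.Random(0)
data = [([1.0, 0.5], 1), ([-1.0, -0.5], 0)]
weights = [0.0, 0.0]
for _ in range(50):
    weights = dp_sgd_step(weights, data, rng=rng)
```

Clipping is what makes the noise meaningful: without a bound on each example's gradient, no fixed amount of noise could hide an unusual record.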

Output Limitation

Restrict model outputs.

Instead of returning full probability distributions:

- Return only predicted labels

- Round or coarsen confidence scores

- Apply threshold-based outputs

Less information means fewer opportunities for attackers.
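A serving layer can enforce these limits before a response leaves the system. The helper below is a hypothetical sketch, not any framework's API. It assumes the model produces a dict of class probabilities, and its three modes correspond to the options above. "Threshold-based output" is read here as withholding the label when confidence is low, which is one reasonable interpretation among several.

```python
def limit_output(probabilities, mode="label", decimals=1, threshold=0.5):
    """Reduce what a prediction API reveals (hypothetical helper).

    probabilities: dict mapping class name -> probability.
    mode: "label"     -> only the top class
          "rounded"   -> top class plus a coarsely rounded confidence
          "threshold" -> top class only if its confidence is at least `threshold`
    """
    label, score = max(probabilities.items(), key=lambda kv: kv[1])
    if mode == "label":
        return {"label": label}
    if mode == "rounded":
        return {"label": label, "confidence": round(score, decimals)}
    if mode == "threshold":
        return {"label": label if score >= threshold else None}
    raise ValueError(f"unknown mode: {mode}")


probs = {"approved": 0.62, "review": 0.31, "declined": 0.07}
print(limit_output(probs))                                    # {'label': 'approved'}
print(limit_output(probs, mode="rounded"))                    # {'label': 'approved', 'confidence': 0.6}
print(limit_output(probs, mode="threshold", threshold=0.7))   # {'label': None}
```

Coarser outputs raise the cost of an attack rather than ruling it out: attacks that rely on predicted labels alone have been demonstrated, so output limitation works best alongside the other defenses in this section.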

Regularization Techniques

Overfitting increases leakage risk. Proper regularization reduces model memorization.

Techniques include:

- Dropout

- Weight decay

- Early stopping
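Weight decay and early stopping are usually one-line options in a training framework, but the logic is simple enough to show directly. This framework-agnostic sketch uses hypothetical helper names; `train_step` and `val_loss` are callables you would supply. Weight decay adds the gradient of an L2 penalty (λ/2)·‖w‖², which is λ·w. Early stopping halts once validation loss has failed to improve for `patience` consecutive epochs.

```python
def l2_regularized_grad(grad, weights, weight_decay):
    """Add the gradient of the L2 penalty (weight_decay / 2) * ||w||^2, i.e. weight_decay * w."""
    return [g + weight_decay * w for g, w in zip(grad, weights)]


def train_with_early_stopping(train_step, val_loss, max_epochs=100, patience=5):
    """Call train_step once per epoch; stop when val_loss has not improved for `patience` epochs.

    Returns (best_epoch, best_loss).
    """
    best_loss, best_epoch, epochs_without_improvement = float("inf"), -1, 0
    for epoch in range(max_epochs):
        train_step(epoch)
        loss = val_loss()
        if loss < best_loss:
            best_loss, best_epoch, epochs_without_improvement = loss, epoch, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled; stop before the model fits training noise
    return best_epoch, best_loss


# Usage with a scripted validation-loss curve (hypothetical numbers).
losses = iter([1.0, 0.8, 0.7, 0.75, 0.72, 0.71])
print(train_with_early_stopping(lambda epoch: None, lambda: next(losses), max_epochs=10, patience=3))  # (2, 0.7)
```

A real training loop would also restore the weights saved at `best_epoch`; this sketch only reports which epoch that was.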

Access Control and Monitoring

Implement:

- API rate limiting

- Query monitoring

- Anomaly detection

Repeated structured queries may signal inversion attempts.
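The sketch below combines two of these controls in plain Python. It assumes inputs arrive as numeric feature vectors, and every threshold (`max_queries`, `window_s`, `similarity_eps`, `max_similar`) is a hypothetical starting point rather than a recommended value. Requests beyond a per-client sliding-window budget are refused, and a client is flagged once too many of its queries land within a small Euclidean distance of one it sent recently, which is the pattern a finite-difference inversion loop produces.

```python
import math
import time
from collections import defaultdict, deque


class QueryMonitor:
    """Per-client sliding-window rate limit plus a simple near-duplicate query detector."""

    def __init__(self, max_queries=100, window_s=60.0, similarity_eps=0.05,
                 max_similar=20, clock=time.monotonic):
        self.max_queries = max_queries
        self.window_s = window_s
        self.similarity_eps = similarity_eps
        self.max_similar = max_similar
        self.clock = clock
        self.times = defaultdict(deque)                        # client -> recent query timestamps
        self.recent = defaultdict(lambda: deque(maxlen=200))   # client -> recent input vectors
        self.similar_counts = defaultdict(int)                 # client -> near-duplicate count

    def check(self, client_id, x):
        """Return "allow", "rate_limited", or "flagged" for one incoming query."""
        now = self.clock()
        stamps = self.times[client_id]
        # Drop timestamps that have fallen out of the sliding window.
        while stamps and now - stamps[0] > self.window_s:
            stamps.popleft()
        if len(stamps) >= self.max_queries:
            return "rate_limited"
        stamps.append(now)
        # Inversion loops send many inputs that differ only by tiny perturbations.
        if any(math.dist(x, prev) < self.similarity_eps for prev in self.recent[client_id]):
            self.similar_counts[client_id] += 1
        self.recent[client_id].append(list(x))
        if self.similar_counts[client_id] > self.max_similar:
            return "flagged"
        return "allow"
```

For simplicity, the near-duplicate counter never resets. A production version would decay it over time, keep its state in a shared store rather than process memory, and route "flagged" clients to review instead of silently serving them.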

Secure Model Deployment

Avoid exposing internal gradients or white-box access in production. Use model wrappers and secure serving infrastructure.

Best Practices for Organizations

Conduct Security Audits

Perform adversarial testing to evaluate whether your models leak information.
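One cheap, concrete test is a threshold-based membership inference audit. It checks membership rather than reconstruction, but a model that is much more confident on its training examples than on unseen ones is memorizing, which is the same weakness inversion attacks exploit. The function below is a minimal sketch that assumes you have collected the model's confidence on the correct label for a sample of training records and for held-out records. It reports the best true-positive rate minus false-positive rate over all thresholds; how large a value is acceptable is a judgment call for your own risk context.

```python
def membership_advantage(member_conf, nonmember_conf):
    """Best (TPR - FPR) of an attack that guesses "member" when confidence >= t.

    member_conf: model confidence on the true label for training examples.
    nonmember_conf: the same quantity for held-out examples never used in training.
    Returns a value in [0, 1]; values near 0 mean this particular test finds little leakage.
    """
    thresholds = sorted(set(member_conf) | set(nonmember_conf))
    best = 0.0
    for t in thresholds:
        tpr = sum(c >= t for c in member_conf) / len(member_conf)
        fpr = sum(c >= t for c in nonmember_conf) / len(nonmember_conf)
        best = max(best, tpr - fpr)
    return best


# Hypothetical audit numbers: training records get noticeably higher confidence.
train_conf = [0.99, 0.98, 0.97, 0.95]
heldout_conf = [0.70, 0.60, 0.96, 0.50]
print(membership_advantage(train_conf, heldout_conf))  # 0.75
```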

Minimize Data Retention

Only collect and store necessary data. Less data reduces exposure.

Encrypt Sensitive Data

Ensure encryption at rest and in transit across all pipelines.

Implement Governance Policies

Create clear documentation around:

- Data collection

- Model training

- Deployment practices

- Monitoring procedures

Security must be integrated from development to deployment.

The Future of Privacy in Machine Learning

As AI adoption increases, attackers will develop more advanced techniques. Data inversion attacks will evolve with improvements in optimization and model interpretability.

Organizations must move beyond traditional cybersecurity approaches. Machine learning security requires specialized knowledge and proactive strategies.

Privacy-preserving AI will become a competitive advantage. Businesses that invest early in secure architectures and privacy-enhancing technologies will gain customer trust and regulatory confidence.

Conclusion

Data inversion attacks expose a hidden vulnerability in machine learning systems. Even without direct access to training datasets, attackers can reconstruct sensitive information through intelligent querying.

This threat highlights an important lesson: models are not just algorithms. They are containers of data.

To stay protected, organizations must implement differential privacy, limit model outputs, strengthen access controls, and invest in secure data engineering practices. Proactive defense is far more effective than reactive damage control.